def. Filtration on a probability space is where filtration is an increasing collection of -algebras where:

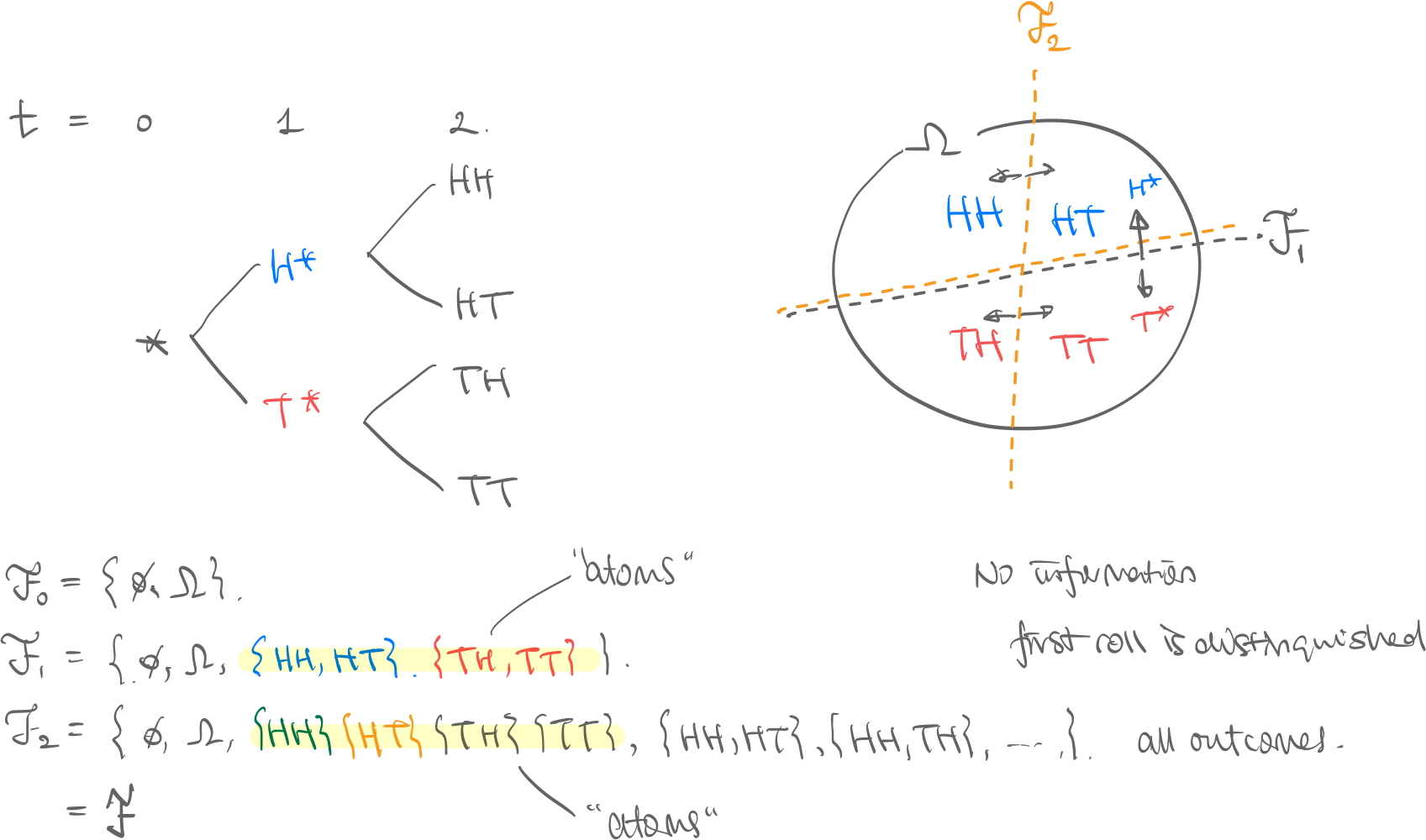

Example. Consider a two-step coin-flip.

- are the final future outcomes, HH, HT, TH, or TT.

- “cut” the outcomes in half, and now we know if we’re in the blue universe (first roll is heads) or the red universe (first roll is tails)

- “cut” the outcomes again into quarters, and now we know specifically what happened.

- Visualization. See the pie cutting diagram. Obviously, because if we know the final outcome, we know if the first roll was heads or tails. Rigorously, since -algebras need to be closed under union, all “lower resolution” events are contained within the “higher resolution” event.

def. Adapted process = Natural Filtration. Process is adapted to filtration if is -measruable for every . Or vice versa, the filtration is the natural filtration of the process. Example. In the above coin-flip example, let random variables be:

Then we can easily see is -measurable, and that this process is adapted to the filtration (=filtration is the natural filtration for process ).

thm. From the adapted=natural process-filtration definition and the filtration definition, we have that filtration :

- ! , because the former just knows about the current , and the latter knows about all the past thus . This becomes important in the Markov property. def. Time Series is a stochastic process indexed by discrete (integer) time points.

- See discussion: Is a time series the same as a stochastic process? - Cross Validated Example. Process where it is sum of the sequence of random variables: :

Memoryless, True Fair-Game

def. Martingale. A process is a martingale iff

Intution. True Fair-game process is a martingale. This doesn’t mean fair game in that when you begin the game and there is no unfair advantage/disadvantage (false fair-game), but that it is a fair game at every single point in time where you roll.

def. Standard Markov. A process is a Markov process iff for

Intuition. Path-agnostic process is Markov. This means that giving the path of how you got to current point doesn’t matter to how the future behaves. Motivation. A process being a Markov process is a really powerful statement since we can use the relatively computationally less complex tools of Markov Chains for analysis. thm. If for process , for every , holds then it is a Markov process. , is not trivial, because natural filtration contains full past history. This property holding means that current state encodes the past state completely, which is not necessarily true. See the Random walk with drift example.

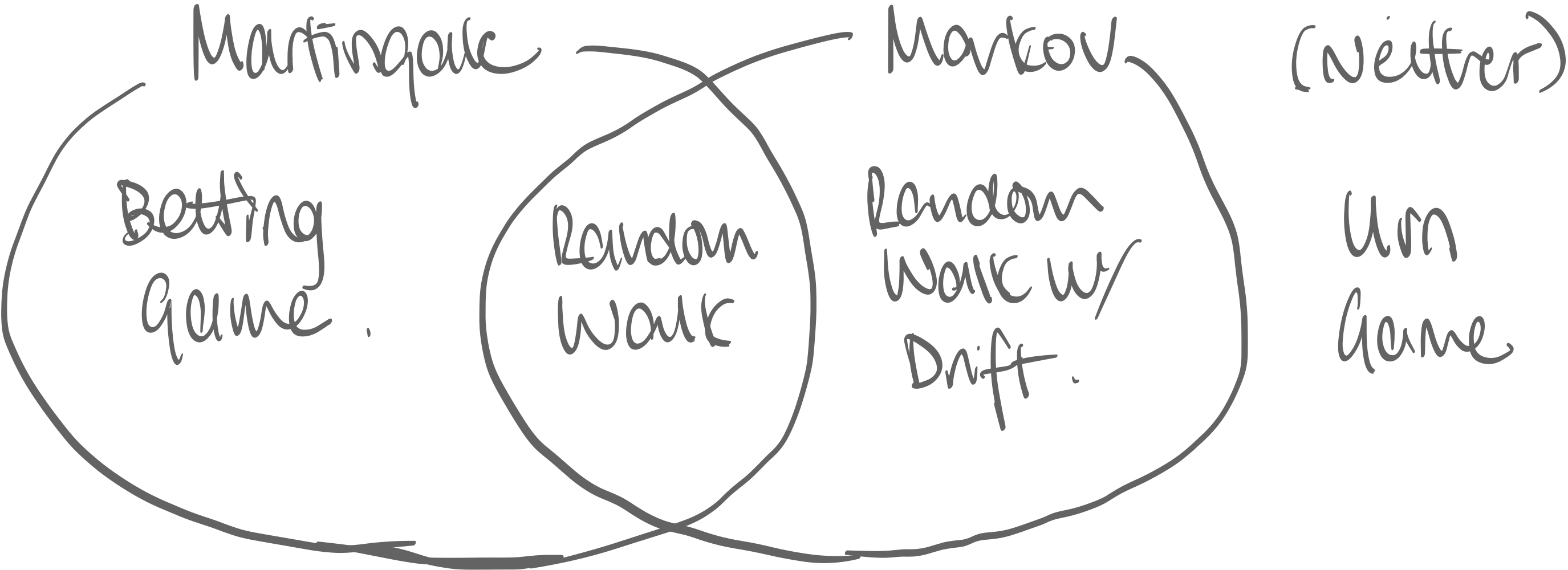

Examples. Consider these four games:

Urn Game

Example. Two red balls and two black balls are in an urn. You pick a ball without replacement for four times. where is if -th pick is red, and if black.

Not Markov

but

Thus , meaning their distributions are not the same, and thus not path agnostic.

Not Martingale

but

Thus trivially not martingale.

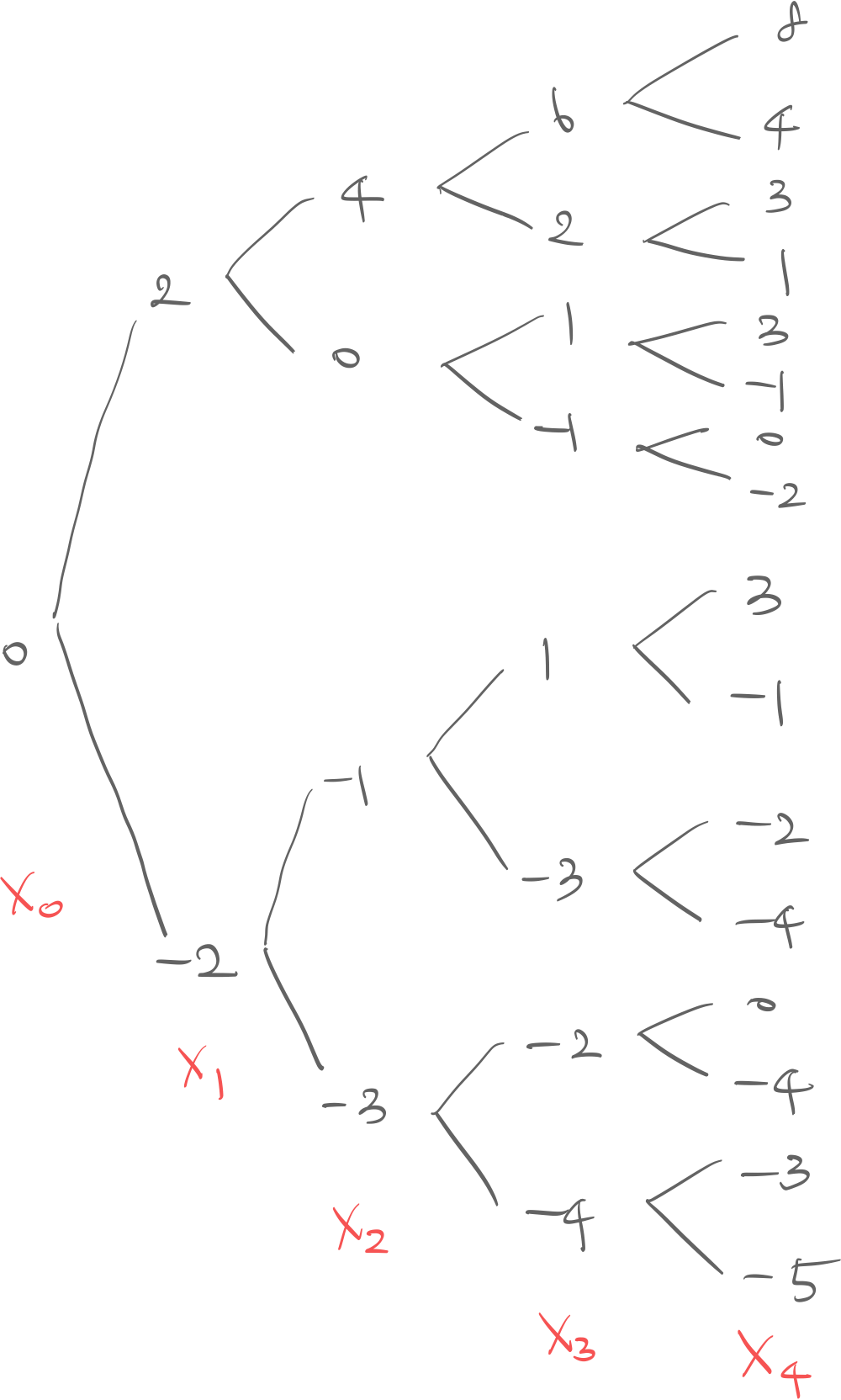

Betting Game

Example. Player wins or loses on each round , but depending on if they won or lost the previous round they bet more or less, and thus on this round the payoff changes.

where

Not Markov

Consider the game tree. Simply observe the distribution of and see how well it predicts :

- has cases for each path

- has cases since and both equal

Is Martingale

since because

Random Walk with Drift

Example. Consider process where:

Is Markov

Isn’t Martingale

but

each case is off by due to drift.

-

are identically distributed.: Stationary

-

then: Martingale

-

: Markov

Strong Markov Property

Motivation. Consider the following special Markov Chain with just two states, 0,1 and deterministic transitions:

- If at , next step is

- If at , next step is except: - on the first time it arrives, it stays there for one more timestep. Thus discrete process has distribution:

This is standard markov, because only considers , the current step. But doesn’t it feel weird that the process depends on what timestep it is on? Shouldn’t a true memorlyless process be independent even of what time it is? def. Stopping Time. Let filtration adapted to process . A random variable is a stopping time wrt iff:

Intutition. Consider stopping time of the first time process its state , ie . Then , i.e. at time step filtration is enough to show it’s time to stop. Example.

- Valid: “Stop after exactly 10 iterations”, “Stop the first time state is 10”

- Invalid: “Stop at the biggest value ever” (Impossible), “Stop when the next state is 10” (Impossible) def. Strong Markov. Let be some stopping time of your choice on process Then has the strong markov property iff for :

Intuition. Like in the motivation section, the distribution of at any timepoint should be independent of the timestep it is in.

Weiner Process

Standard Weiner Process

Motivation. Assume there are random variables as:

- let be a stochastic process such that:

Then we get the Weiner process as:

def. Brownian Motion. (=Weiner process) (written as ) is a set of random variables continuous-time indexed and has the following properties:

- Is a continuous process

-

- Thus,

- Any interval and where and do not overlap is independent. Notation wise, we think of , i.e. a set of random variables indexed by .

Remark. Brownian Motion can also be defined as an Ito Process that satisfies the following stochastic differential equation:

Trivially, in the case of standard brownian motion, Thus .

Properties. Brownian Motion satisfies the following properties:

- Martingale Property: Brownian motion is martingale: where

- Markov Property:

- is independent of and…

- Alternatively: where any combination

- Scaling Invariance: If is a brownian motion, then is also a brownian motion

- Quadratic Variation property:

With Scale and Drift

def. Weiner Process with Drift and Scaling (WPDS). Such is a Weiner process that has the following properties:

- Is a continuous process

- Any interval and where and do not overlap is independent.

- Properties:

- : drift; the higher, the more it climbs

- : scaling; the higher, the more volatile (See y-axis:)

- : drift; the higher, the more it climbs

thm. Standard WP to Scale and Drift. Given:

- is a standard Weiner process

- is a Weiner process with drift and scaling , with initial value

- ⇒ Then the following relationship holds (two equivalent definitions)

Geometric Brownian Motion

thm. Geometric Brownian Motion. Given is a WPDS then the following is a geometric brownian motion with initial value : (two equivalent definitions)

Geometric to WPSD

The following is equivalent:

Properties of Stochastic Processes

def. Adapted process. A stochastic process is adapted to filtration set if is a -measurable function.

Intuition. Recall that a sigma algebra can be thought of as “resolution of information”. is realized and its information is . The series of filtrations correspond to the series of random variables ; for each timestep, the information gets higher and higher resolution.

Martingale Process

Motivation. Many stochastic processes, including the standard brownian motion has the property that you can’t predict future trajectory based on information from its past trajectory. We formalize this as a martingale.

def. Martingale. A stochastic process on a filtered probability space is a martingale w.r.t. its adapted filtration if:

This is equivalent to saying

- by taking expectation on both sides of above

-

- This is a simple proof that brownian motion is a martingale;

Quadratic Variation

Motivation. Quadratic variation is not about variation of probability distributions. It’s a way to measure how “shaky” a function (in this case, a Brownian motion) is in a given interval.

def. Quadratic Variation. For a stochastic process , its quadratic variation on interval , for , is: